The AI Tangle

A follow-up to earlier thoughts

Note: This post is too long for email so please read in app or browser

I’ve written a couple of posts about AI before, arguing basically that it hallucinates and is therefore inherently untrustworthy[1] and that it’s a massive incinerator of cash[2].

This post is slightly more AI-positive, in that I’m actually using some AI tools successfully, and I’m far from alone in this. As I wrote in this note (and associated Substack comment), OpenAI’s open-weight model gpt‑oss‑120b is giving me useful code that I can run. It takes minutes for the AI to create and for me to test, as opposed to hours if I do it all myself. So I’m not a total “AI is a waste of money” doomster. But I’m not an “AI is the future” optimist either.

How to Use AI Successfully

I admit that I am somewhat echoing the findings of ESR as he Xeeted about his use of AI as a development tool:

I'm a software engineer.. I now use LLM assistance heavily. It's not like working with a programmer who's better than me, it's like working with a bright intern who doesn't have good design sense yet but has memorized all of the manuals. LLMs can exceed the human capacity to assemble large masses of facts, but they are deficient in higher-level judgment and taste.

If you treat your AI buddy as an eager intern, or (possibly racist, but not inaccurate IMHO) as Sanjay, your outsourced developer in Bangalore, then you’ll have a successful time using it. Write a proper detailed spec. Give it corrections when it gets it wrong. Very definitely give it sample expected output and use cases. Similar rules apply to using it for industry research, writing marketing stuff or other office activities.

Works well when under constant supervision and cornered like a rat in a trap.

Most critically, you must have a way to validate the output that does not use the AI itself (or any other AI, come to think of it). For example, in my coding usage I have explicit expected results and I can run the scripts and see that they produce the output I expect. In cases where I ask AI for, say, a summary of current news on topic X, I always ask it to provide links as citations and I click on most of them to make sure that they say what the AI says they say - spoiler alert: they don’t always exist or say what the AI claims[3].
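The link-clicking check can be partly mechanized. What follows is a minimal Python sketch, not a finished tool: the names `check_citations`, `normalize` and `fetch_url` are made up for illustration, and the matching is a naive substring search that will miss quotes broken up by HTML tags or paraphrased by the AI. It assumes you have already pulled out (quoted text, URL) pairs from the AI's answer.

```python
import re
import urllib.request

def normalize(text):
    """Lower-case, straighten curly quotes and collapse whitespace."""
    text = text.replace("\u2019", "'").replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip().lower()

def fetch_url(url, timeout=10):
    """Return the page body as text, or None if the page cannot be fetched."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read().decode("utf-8", errors="replace")
    except (OSError, ValueError):
        # URLError, HTTPError and timeouts are all OSError subclasses;
        # ValueError covers malformed URLs.
        return None

def check_citations(claims, fetch=fetch_url):
    """claims: iterable of (quoted_text, url) pairs.
    fetch: callable mapping a URL to page text, or None if the page is missing.
    Returns a list of (url, status) tuples."""
    results = []
    for quote, url in claims:
        page = fetch(url)
        if page is None:
            status = "missing page"
        elif normalize(quote) in normalize(page):
            status = "ok"
        else:
            status = "quote not found"
        results.append((url, status))
    return results

# Offline demonstration with a stand-in for the web (hypothetical URLs).
fake_web = {"https://example.com/a": "The  market grew 5% last year."}
demo = check_citations(
    [
        ("market grew 5%", "https://example.com/a"),
        ("market fell 5%", "https://example.com/a"),
        ("anything", "https://example.com/gone"),
    ],
    fetch=fake_web.get,
)
for url, status in demo:
    print(url, "->", status)
```

Passing the fetcher in as a parameter keeps the check itself testable without network access, and the check never asks an AI whether the citation is real.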

Fundamentally LLM AI works as a multiplier that saves you from doing a lot of the drudgery and allows you to focus on the key executive issues. What that means is that you cannot trust it as much to develop new (to you) knowledge as you can to summarize existing knowledge or synthesize output from multiple known sources and/or put stuff in a standard format. You get better results from constraining the AI first with as many details as you can and asking it to make step by step improvements rather than doing everything at once.

Update: this Ars Technica article presents academic evidence that LLM AI really is unable to go beyond its training data to develop new thoughts. Not exactly a surprise, but nice to have clear evidence.

AI and the Employment Market

That leads to a problem though. The problem is that we no longer need interns, junior associates and people like Sanjay in Bangalore because AI is free or cheaper and probably faster. Now whether it is actually cheaper is something which we’ll debate below, but assume it is the case here. Given that these people are expensive, albeit cheaper than people with 5-10 years of real experience at whatever, that’s a short term win for the organization that uses AI to do their jobs.

However, while it is a short term win, it’s also a problem 5-10 years from now when we don’t have any people with 5-10 years of real experience because we didn’t hire them as interns and so they got a job doing something else - or worse, failed to get any job at all because of no credentials and the AI driven mess that is current recruiting. Now it is possible that in 5-10 years that generation of AIs will be as good as people with 5-10 years’ experience, but maybe not (update: see the Ars Technica link above suggesting that they won’t).

And whether they are or aren’t, that doesn’t give jobs to the young people of today. Andrew Yang writes about this in more detail[4]:

I’m going to dwell on the latter, particularly its impact on entry-level white collar workers, otherwise known as recent college graduates. I had dinner with the CEO of a tech company last week. He said, “We just cut almost 20% of our workers, and chances are we’re going to be doing it again in the next year or two. There are a lot of efficiencies we are getting with AI. At the same time, my daughter is in college and she’s looking for a job. She’s trying to avoid tech because she sees what I’m doing. I’m not sure what her classmates are going to do for jobs in a few years.”

A partner at a prominent law firm told me, “AI is now doing work that used to be done by 1st to 3rd year associates. AI can generate a motion in an hour that might take an associate a week. And the work is better. Someone should tell the folks applying to law school right now.” I posted this quote on X and it went viral, getting 7 million views. Also this year, law school applications surged 21% - there’s a flight to safety, though in this case it’s not so safe. 3 years from now, how many lawyers are going to be getting hired?

A professor at a prestigious university shared, “For the first time I have alums calling me saying they’re still without a job, and they’re driving an Uber to make ends meet.”

And there’s a lot more later too that is worth reading.

Now, given my comments above regarding AI output, I am extremely skeptical that without human review “AI can generate a motion in an hour that might take an associate a week. And the work is better.”[5] The reason is that we see repeated news stories where lawyers, and now judges, let AI do the writing and it turns out to be full of hallucinations. Mind you, we only see in the news the cases where the errors are egregious and another lawyer cared enough to check; the tool I mention in footnote 3 might find all kinds of interesting, hitherto unnoticed errors in any number of recent filings and cases if we gave it a subscription to PACER. However, I also note that if AI usage has become common, then maybe most lawyers are taking the time to review and fix the hallucinations, and the ones we see with hallucinations come from the sloppy, rushed lawyers.

The same goes for all the other white collar jobs that AI can potentially replace. AI isn’t at the level where it can be trusted unsupervised, but I can quite well believe that a combination of AI plus a couple of junior associates checking the output is faster, cheaper and generally better than the current solution of say half a dozen to a dozen interns, junior associates and Sanjays.

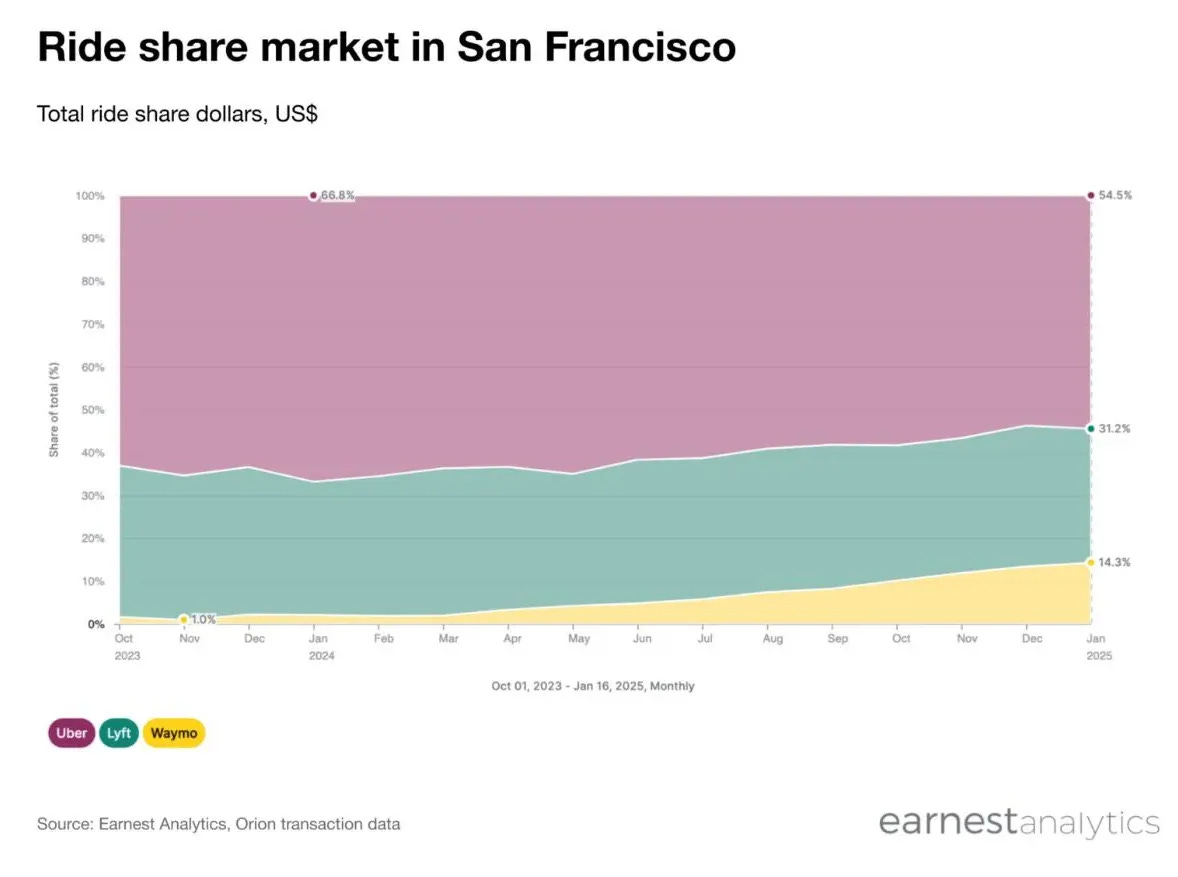

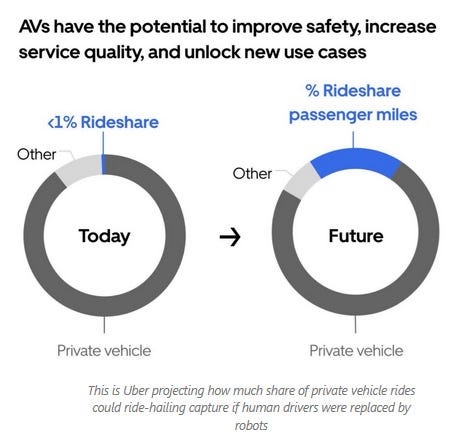

That’s bad news for the less capable would-be interns and juniors and extremely bad for Bangalore. We are already seeing problems in the lower end of the white collar job market because we have too many mediocre (if that) graduates chasing too few white collar jobs and I can’t see this getting better. Especially now that President Trump has more or less killed DEI and effectively allowed employers to test applicants on their capabilities, if you aren’t actually in the top 10-20% of the range you’re now on the hook for thousands of dollars of student loans and need to get a job at Starbucks or Uber to pay them off. Worse, bluntly those jobs are themselves at serious risk. Starbucks and other coffee shop barrista jobs are easy to automate - or automate enough that there’s one person where now there are five. If self-driving vehicles work as well as they appear to Uber, Lyft and for that matter almost all shortish distance public transport are dead. Tomas Pueyo has a couple of interesting graphs in his recent substack about robotaxis6:

and

I commented on Ed Christie’s post[7] about Tomas’ post that:

If the price is right and Tesla priced robotaxis look like they could be there, they'll also disrupt all other forms of public transport too.

Say it costs me $5 for an (8 mile) ride into town on the bus (light rail, tram, whatever) and double that, $10, for a self-driving taxi. It's going to be worth paying that $10 because the robotaxi will pick me up at my house and drive me to exactly where I want to go at the time (more or less) that I want. Regular public transport requires me to walk to the closest bus stop/station, walk from the closest one to my destination to the destination and is probably not that frequent. It's also almost certainly slower because the route is less direct and it has to stop. And of course a robotaxi is a lot safer because you won't be interacting with the drugged out nutter on the bus.

Put all that together and it's well worth paying a premium

Note that $1.20/mile (close to the $1.25/mile implied by $10 for an 8 mile ride) is 60% of what Uber charges but is, if you read Tomas’ post, a plausible price point for a profitable Tesla-powered robotaxi fleet. That means that as well as the current Uber/Lyft and taxi drivers, much of the public transit market with all its expensive unionized work force is also at risk in a lot of places because, in addition to the convenience factor I mentioned, while it may cost riders less than $1.20/mile, the operational costs per passenger mile will often approach or exceed that number. Governments do a ton of stupid things to keep the unions happy, but a city with enough robotaxis and a budget crunch may well decide to slash public transport to pay for the salaries and pensions of its other workers.

That’s the bad news for the domestic market.

It’s actually worse for the outsourcers. One huge problem India’s outsourcing industry faces is that it is annoyingly far around the world from its primary market - the USA. I’ve done work with outsourcers and it works OK if you can have clear milestones and objectives and predictable delivery schedules. In those cases you don’t need to talk to the outsourcer every day or more frequently. But even then, in cases where you are doing the QA locally (and you’d be an idiot if you outsourced that too), it is really helpful to have QA and engineering in the same timezone to promptly reproduce and fix issues. I’ve seen a ton of issues that get stuck in a multiday loop of “I tried doing X, couldn’t reproduce”, “Did you also do Y as I wrote in the report?” before the engineers can find the issue. If they’d been in the same timezone, a couple of 5 minute phone calls or Slack chats would have seen engineering reproduce the bug an hour after it was reported and (probably) fix it later that day.

Sure, outsourcers try to fix this by making (some of) their employees work nights, but I’m not sure that helps because the engineers working nights are generally tired and hence more error-prone. It gets even worse if you are doing “agile” devops stuff where the expectation is to roll out new features daily, and it’s just as bad for other sorts of outsourcing such as financial or HR admin work.

If the choice is to pay Tata for barely adequate work from a dozen Sanjays or have a local AI and a couple of local employees riding herd on it, then the latter is going to win because it isn’t just cheaper, it also provides a better, faster service. That is especially true in the current political environment of MAGA.

It is likely, in fact, that the need for local AI wranglers will partly offset the fall in demand for general entry level jobs and, ironically, the students who cheated by using AI to write their class work the best are likely to be the ones best suited to this job. Though all this assumes very much that AI is cheaper than people.

How Much Does it Cost?

That, however, is a question that we do not know the answer to. Right now AI companies are mostly giving away their product for free or far below cost. My coding experiments via Hugging Face are not costing me anything. All the students using ChatGPT and friends to write their classwork are using the free versions. Most businesses are doing the same too, which is an issue in that they are sharing potentially confidential data with AI companies that will use that data with other users, and it isn’t helping the AI companies’ revenue either. Some organizations are paying companies for their AI offerings but they don’t appear to be paying the actual rate those services cost the AI companies. In my last post I said it looks like AI companies lose roughly $1 for every $1 they get in revenue. That may actually be optimistic. Ed Zitron has another recent long post about how little money is being made by the LLM AI businesses as a whole and how even apparent success stories like Cursor are not making anything close to actual money. Grok, BTW, seems to be in line to lose about $20 for every $1 of revenue this year.

But assume that AI companies can successfully raise prices from free or nearly free to some level that pays them real money. How much money can they get out of their customers? And how much do they need to make a good ROI on the money invested?

Let me work this backwards. If AI is to replace low end knowledge workers it needs to cost less than the people it is replacing. So if it is going to offer value, it’s replacing someone earning around, say, $20/hour for 40 hours a week, 50 weeks a year, which is an annual salary of $40,000. Assume the taxes, healthcare and other benefits the employer pays are the cost difference (i.e. benefit) between AI getting the job and a human.

Great. How many people are we looking at? There are, roughly, 4 million university graduates in the US a year. Work on the estimate that one year of all those graduates are the ones whose jobs can be replaced by LLM AI. Obviously not all graduates go into AI replaceable jobs, but then low level employees are more than just the first year graduates. Looked at another way 4 million is about 2-3% of the entire US civilian labor market. It’s hard to see how AI directly replaces more than that so 4M seems like a plausible maximum number for salaries that can be used to pay for AI.

That means AI has a potential maximum annual revenue on the order of 40,000 * 4M = $160,000 M = $160B. Do some jiggling and we can see that number be as high as $200B or as low as $125B, but as a back of the envelope calculation $160B seems reasonable. If the AI companies can capture that amount of revenue then they have a viable business as it will allow them to not just pay for the operating costs of the server farms but also pay back a fair chunk of the CAPEX and R&D costs, and in a few years provide a positive ROI to investors.
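For anyone who wants to jiggle the inputs themselves, here is a minimal Python sketch of the back-of-the-envelope calculation above. The constants come straight from the text; the function name `revenue_ceiling` is just an illustrative label.

```python
# Ceiling on AI revenue if it captures the salaries of the jobs it replaces.
HOURLY_WAGE = 20              # dollars per hour
HOURS_PER_WEEK = 40
WEEKS_PER_YEAR = 50
REPLACEABLE_JOBS = 4_000_000  # roughly one year of US university graduates

def revenue_ceiling(jobs, salary):
    """Maximum annual revenue in dollars: one salary captured per replaced job."""
    return jobs * salary

annual_salary = HOURLY_WAGE * HOURS_PER_WEEK * WEEKS_PER_YEAR
ceiling = revenue_ceiling(REPLACEABLE_JOBS, annual_salary)

print(f"Annual salary: ${annual_salary:,}")         # Annual salary: $40,000
print(f"Revenue ceiling: ${ceiling / 1e9:.0f}B")    # Revenue ceiling: $160B
```

As one example of the jiggling, 5 million jobs at the same salary would give $200B.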

But it hinges on the “if”. If the number of average $40k salary jobs AI can replace is only 1 million then AI revenues are limited to around $40B annually. $40B is probably barely enough to cover OPEX and the depreciation part of the CAPEX. I assume that a large proportion of data center spend is GPUs and the like which will last no more than 5 years before they need replacing and I’m probably being generous and they need replacing quicker.

In my incinerating cash post I calculated that LLM AI as a whole needed to generate positive cashflow on the order of Google ($100B/year) to achieve a reasonable timely ROI on the amount invested plus that intended to be invested in the next year or two. So if $40B / 1M salaries is the basic cost of operating the AI data centers plus the share of depreciation, which I think is reasonable, then that means the additional $100B a year has to come from additional jobs being replaced and $100B is 2.5M such salaries.

That is to say for LLM AI to provide a reasonable ROI then in the next couple of years they need to convince employers to entirely replace roughly 3.5M lower end jobs and pay for AI to do that work instead. Now admittedly not all those jobs have to come from the US (see Sanjay, but also see employers in Europe and Asia also adopting AI), but probably most of them have to.
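The jobs count falls out of dividing each revenue requirement by the $40k salary. A small Python sketch, using only the figures above (the names are illustrative):

```python
SALARY = 40_000                          # dollars per replaced job per year
OPEX_AND_DEPRECIATION = 40_000_000_000   # basic cost of running the data centers
TARGET_CASHFLOW = 100_000_000_000        # Google-scale cashflow needed for ROI

def jobs_needed(revenue, salary=SALARY):
    """Number of replaced salaries required to fund a given annual revenue."""
    return revenue // salary

jobs_for_costs = jobs_needed(OPEX_AND_DEPRECIATION)
jobs_for_roi = jobs_needed(TARGET_CASHFLOW)
total_jobs = jobs_for_costs + jobs_for_roi

print(f"To cover costs:  {jobs_for_costs:,} jobs")  # 1,000,000
print(f"To deliver ROI:  {jobs_for_roi:,} jobs")    # 2,500,000
print(f"Total:           {total_jobs:,} jobs")      # 3,500,000
```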

Look at it slightly differently. LLM AI companies need to generate between $120B and $150B a year to cover costs and make a decent return. Total annual wages for the US are on the order of $11T or so. AI companies have to take around 1-1.5% of that amount. That is theoretically possible. But it’s a stretch and probably not something that is going to happen in the next year or two. For reference, total revenue for 2025 is likely to be no more than $35B and probably less - quite possibly it will be half that.

It’s an even bigger stretch given current pricing, where they spend at least $2 for each $1 they receive. AI companies need to at least double their income from 2025 levels just to break even and, to make a decent ROI, the industry as a whole needs to quadruple that number or more.
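To put rough numbers on the stretch, here is a short Python sketch comparing the required revenue with total US wages and with the 2025 revenue estimate. All inputs are the round figures quoted above, in billions of dollars; the function names are illustrative.

```python
US_WAGES_B = 11_000              # about $11T of annual US wages
REVENUE_NEEDED_B = (120, 150)    # range needed to cover costs and make a return
REVENUE_2025_B = 35              # upper estimate; the text says it may be half that

def wage_share_pct(needed_b, wages_b=US_WAGES_B):
    """Required revenue as a percentage of total US wages."""
    return needed_b / wages_b * 100

def growth_multiple(needed_b, current_b):
    """How many times current revenue has to grow to reach the target."""
    return needed_b / current_b

for needed in REVENUE_NEEDED_B:
    print(
        f"${needed}B is {wage_share_pct(needed):.2f}% of US wages, "
        f"{growth_multiple(needed, REVENUE_2025_B):.1f}x of $35B, "
        f"{growth_multiple(needed, REVENUE_2025_B / 2):.1f}x of $17.5B"
    )
```

On these inputs the required growth is roughly 3.4x to 4.3x from a $35B base, and about double that if 2025 revenue comes in at half the estimate.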

Other Large Revenue Sources Are Not Apparent

OK so maybe they can simply get to $40B/year from raising prices to corporate customers a bit and increasing the numbers of them. That gets them to very basic break even on opex. They need to raise another $100B using other ways. Well what other ways?

How about academia? Let’s assume they can sell a $10/month, or $100/year with annual discount, subscription to every student in US secondary and tertiary education as well as all the teachers and professors. What does that get them?

ChatGPT told me (with links) that there are roughly 29M students in grades 6-12 and another 19M in tertiary education including grad students. That’s a total of close to 50M. Add teachers and professors and you increase that by no more than 10% - admin staff are obviously more but I’m not counting those.

50M * $100/year = $5B.

Even if you manage to charge the ~5M grad students and ~5M teachers/professors double the $100 you don’t move the needle much. $5-$6B seems like about as much as you can plausibly extract from the education sector. You can’t get the sector to replace its administrators so there’s no money coming from that budget and pressure is on to cut admin budgets so they probably can’t get a budget increase to pay for AI.

Now obviously there’s the education sector outside the US as well. Assume students in Europe and Japan, Korea, Australia etc. pay a similar amount and you’re probably stretching to get another $5B. For reasons that I trust are obvious, the rest of the world including West Taiwan and the Indian subcontinent are not going to pay $100/student or even close.

So the global education market is probably topped out at $10B/year for the US AI LLM companies. That’s roughly 10% of the money they need.
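The education estimate is simple enough to lay out as a Python sketch. The headcounts and prices are the rounded figures from the text (ChatGPT's 29M + 19M students is treated as 50M including staff), the international figure is the guess above rather than a calculation, and the variable names are illustrative.

```python
US_HEADCOUNT = 50_000_000          # students plus teachers, rounded
PRICE_PER_YEAR = 100               # dollars, with annual discount
PREMIUM_USERS = 10_000_000         # ~5M grad students + ~5M teachers/professors
INTERNATIONAL = 5_000_000_000      # rough guess for Europe, Japan, Korea, Australia etc.
EXTRA_NEEDED = 100_000_000_000     # the extra $100B/year the industry has to find

us_base = US_HEADCOUNT * PRICE_PER_YEAR
# Premium users pay double, i.e. one extra $100 each on top of the base price.
us_with_premium = us_base + PREMIUM_USERS * PRICE_PER_YEAR
global_low = us_base + INTERNATIONAL
global_high = us_with_premium + INTERNATIONAL
share_pct = global_low / EXTRA_NEEDED * 100

print(f"US: ${us_base / 1e9:.0f}B to ${us_with_premium / 1e9:.0f}B")
print(f"Global: ${global_low / 1e9:.0f}B to ${global_high / 1e9:.0f}B")
print(f"Share of the extra $100B: about {share_pct:.0f}%")
```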

I can see individual subscriptions by artists and other creative self-employed kicking in another $10B or so at $100-$200 / user / year subscriptions. Would I personally spend that? No. But I know quite a few people with MidJourney subs as well as people who have Xitter subs who might be induced to pay for a higher tier of Xitter that included better Grok access. It seems hard to imagine that there are more than 50M such people in the US and another 50M outside, so we’ll put that down as another 10%.

We have 80% left.

Cisco’s Webex product (a Slack equivalent) has AI features like meeting transcription and summarization that presumably cost a bit. Meta has “helpfully” stuck an AI agent into FB Messenger chats and maybe they have a way to convince advertisers to pay for that somehow. There are support chatbots (e.g. the Substack one) and sales assistants that probably don’t directly take jobs away from actual employees but can be charged for, so those are in addition to the $40B/year of current commercial AI usage. Stuff all that plus translation, image recognition and so on and maybe there’s another $10B in total revenue here. Maybe.

70% left

And I’m struggling.

Put it all together and I can see LLM AI in the near future generating around $70B/year. It needs to generate double that.
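The running tally in this section can be reproduced with a few lines of Python. This is only a sketch of the bookkeeping: the amounts are the round $10B guesses from the paragraphs above, in billions, and the labels are illustrative.

```python
CORPORATE_B = 40        # corporate usage that covers basic opex
EXTRA_TARGET_B = 100    # additional revenue needed on top of that

EXTRA_SOURCES = [
    ("education", 10),
    ("individual subscriptions", 10),
    ("embedded features and chatbots", 10),
]

def tally(sources, corporate_b=CORPORATE_B, extra_target_b=EXTRA_TARGET_B):
    """Return (plausible total, total needed, % of the extra left after each source)."""
    remaining = extra_target_b
    remaining_pcts = []
    for _name, amount in sources:
        remaining -= amount
        remaining_pcts.append(round(remaining / extra_target_b * 100))
    total = corporate_b + sum(amount for _name, amount in sources)
    needed = corporate_b + extra_target_b
    return total, needed, remaining_pcts

total, needed, remaining_pcts = tally(EXTRA_SOURCES)
for (name, amount), pct in zip(EXTRA_SOURCES, remaining_pcts):
    print(f"{name}: +${amount}B, {pct}% of the extra still to find")
print(f"Plausible total: ${total}B/year against ${needed}B/year needed")
```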

The good news from this is that AI probably isn’t going to totally crater the jobs market directly. The bad news is that it may crater the financial markets because it’s sucked up an unsustainable amount of capital (at least $250B) that is not going to get paid back.

LLM Considered Harmful

Someone once said “Logic is an organized way to go wrong with confidence.” LLM AI looks at logic and metaphorically says “Hold my beer and watch this”. Brad DeLong has a post about ChatGPT where he tried to get it to give him the ISBN for a book and this goes horribly wrong.

AI = Actively Incinerating Cash?


Come to think of it, there’s probably a tool to be written, using AI as developer, that pulls all the cited links in a document and searches in them for the quoted text.

For more on the legal pitfalls and prospects regarding AI, see this Substack.

Humans cannot generate flawless output on one pass either. That’s why we have the concept of the draft. The lawyers who submitted LLM output without reviewing it are likely the type of bad lawyer who would submit an articling student’s first draft without reading it either.

Maybe a smaller work force is a ̶g̶o̶o̶d̶ ̶ great thing. Perhaps the world where families included a breadwinner and a homemaker wasn't so bad.

I queried Grok concerning the percentage of women in the work force:

"1925: Data from the U.S. Census Bureau indicates that approximately 20% of the U.S. workforce was female in 1920, with similar figures likely for 1925. Women were primarily employed in roles like domestic service, clerical work, and manufacturing, with limited access to professional occupations.

2025: According to the U.S. Bureau of Labor Statistics, in July 2025, women made up about 47% of the U.S. workforce. This reflects a significant increase over the century, driven by societal changes, increased education, and policy shifts."

Since this is trivial I didn't double check whether Grok's lying, but it'd be easy to do so (data sources are noted in Grok's reply).

No, the honorable trade of homemaking did not mean a world of barefoot and pregnant drudges. A. D.... (After Da advent of fridges, vacuums and washing machines.) the lady of the house had time and means for an active and satisfying life, social and otherwise.

Maybe, just maybe less work and more play just might bring Jack and Jill a lot more joy.

BTW: Anchorage/lower forty eight; For many years many of us here in Alaska kind of thought of it that way, we'd often refer to it as north Seattle, a large city only twenty minutes away from Alaska. ;-)